LLAMA2 13B is faster than LLAMA2 7B, according to NVIDIA benchmark!

Interesting findings on NVIDIA's LLAMA 2 benchmark results.

TL;DR ⏱️

- NVIDIA LLAMA 2 Benchmark results

- LLAMA 13B reported faster than LLAMA 7B

- Questions about the accuracy of these findings

- Seeking community insights

GenAI community/NVIDIA: I am confused! Can anyone help?

Interesting Findings 📈

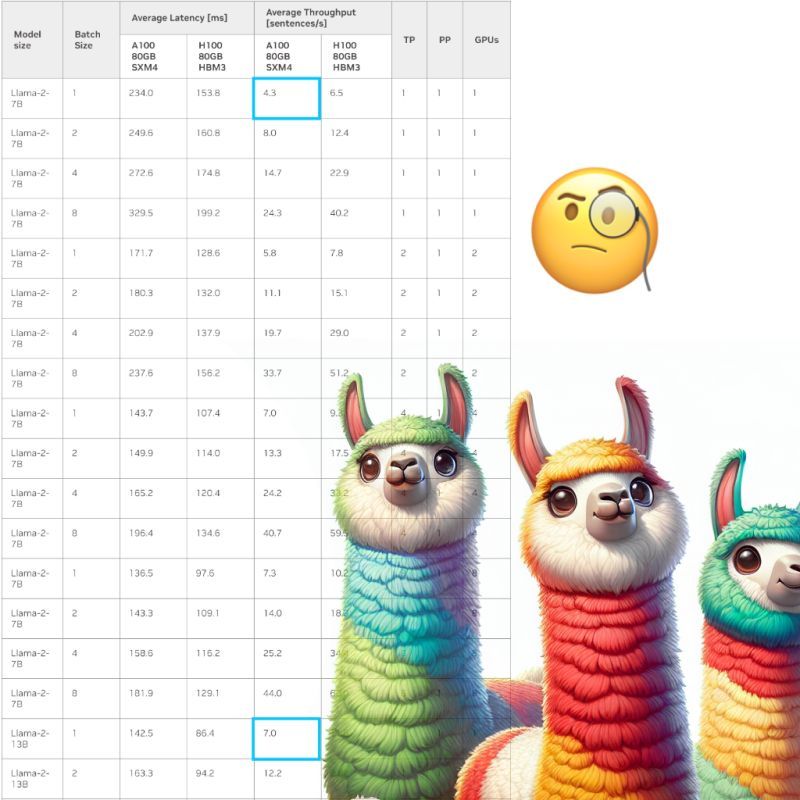

- NVIDIA LLAMA 2 Benchmark (including sentence throughput)

- Compares LLAMA-2 7B, 13B, and 70B

- Weird finding: LLAMA 13B is reported to be faster than LLAMA 7B

- Explicit Numbers: 7B Model has ~4 sentences/second throughput, 13B Model has ~7 sentences/second (LLAMA 70B ~1 sentence/second - this last one suits my expectation)

Questions 🤔

- NVIDIA NVIDIA AI, is there a mistake or can anyone else help me understand these numbers?

How we found out 📚

- Within our lovely GenAI team @Comma Soft AG, we are looking into tech details to implement the best solution

Link 📚

- Source I am talking about: https://lnkd.in/e2sUsi63 📚

Credit ❤️

- Nvidia thanks for providing benchmarks for LLAMA2

For further GenAI and ML tech discussions or such "weird" findings, reach out to me/follow me

#genai #machinelearning #llama