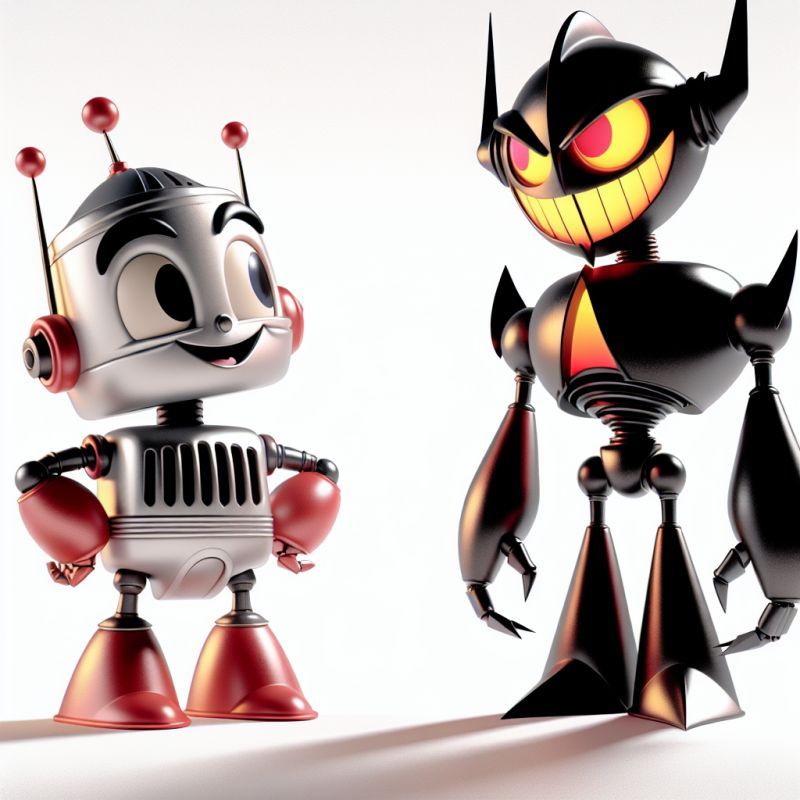

What expectations do you have regarding the values and norms of your GenAI chat assistants? Highly sensitive topic in the LLM space! My take...

Exploring the ethical considerations and expectations surrounding the values and norms embedded in GenAI chat assistants.

TL;DR ⏱️

- LLMs generate text based on training

- Alignment and finetuning influence behavior

- Ethical considerations in different languages

- Need for a holistic view on model behavior

Background Information 🤓

- LLMs are GenAI models that generate texts

- The text-generating behavior is based on the LLM's training

- Chat LLMs are typically trained in three phases:

  - Pretraining on large (filtered) text corpora

  - Finetuning on, for example, instruction-tuning data

  - Final alignment to encourage preferred responses

Some classic methods for adapting LLM behavior 👮🏼

- Filter certain content, for example NSFW material, from the pretraining data

- Try to induce certain behaviors that we consider appropriate through alignment

- Craft system prompts in which we try to give the Chat Agent specific character traits
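Two of these methods, data filtering and system prompts, can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the blocklist terms and the helper names `keep_document` and `build_chat` are hypothetical, and real filtering usually relies on trained classifiers rather than keyword matching. The `role`/`content` message layout is a common chat convention that is assumed here.

```python
from typing import Dict, List

# Hypothetical blocklist; placeholder terms for illustration only.
BLOCKLIST = {"nsfw_term_a", "nsfw_term_b"}


def keep_document(text: str) -> bool:
    """Return True if no blocklisted term appears as a whitespace-separated token."""
    tokens = set(text.lower().split())
    return tokens.isdisjoint(BLOCKLIST)


def build_chat(system_prompt: str, user_message: str) -> List[Dict[str, str]]:
    """Put the system prompt in front of the user message (assumed message format)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]


# Filtering step: drop documents that contain blocklisted tokens.
corpus = ["A harmless sentence.", "Contains nsfw_term_a here."]
filtered = [doc for doc in corpus if keep_document(doc)]

# System prompt step: describe the character traits we want the assistant to show.
messages = build_chat("You are a polite, factual assistant.", "Hello!")
```

Note that both steps only nudge behavior: a keyword filter misses paraphrases, and a system prompt can be overridden by later conversation turns.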

Possible Issues 🫣

- Would you expect different values and norms depending on the language you prompt in? Even before alignment, culture and language may already be correlated in the respective token spaces during pretraining.

- Do LLMs map all languages, and the values they carry, into the same representation space or into different ones?

- When using LLMs, we should pay attention not only to model performance but also to model behavior, including in other languages and token spaces.

- Does alignment-finetuning in one language resolve subjectively problematic behavior in other prompt languages?

- Who should decide about the norms and values embedded in GenAI approaches? And do you check those details when selecting a pretrained model?
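One way to start checking behavior across prompt languages is to send the same request in several languages and compare a simple behavioral signal, such as whether the model refuses. The sketch below is only a rough illustration under stated assumptions: `refusal_by_language`, the marker phrases, and `stub_generate` are all hypothetical, and substring matching is a crude proxy for a proper evaluation. In practice `generate` would wrap a real model call.

```python
from typing import Callable, Dict

# Hypothetical refusal phrases per language; a real study needs a better detector.
REFUSAL_MARKERS = {
    "en": ("i cannot", "i can't"),
    "de": ("ich kann nicht", "das kann ich nicht"),
}


def refusal_by_language(
    generate: Callable[[str], str], prompts: Dict[str, str]
) -> Dict[str, bool]:
    """For each language, report whether the answer contains a refusal marker."""
    results = {}
    for lang, prompt in prompts.items():
        answer = generate(prompt).lower()
        markers = REFUSAL_MARKERS.get(lang, ())
        results[lang] = any(marker in answer for marker in markers)
    return results


def stub_generate(prompt: str) -> str:
    """Stand-in for a model call: refuses the English prompt, answers the German one."""
    if prompt.startswith("How"):
        return "I cannot help with that."
    return "Klar, so geht das: ..."


# The same request phrased in two languages.
prompts = {"en": "How do I do X?", "de": "Wie mache ich X?"}
report = refusal_by_language(stub_generate, prompts)
```

A mismatch between languages in such a report would be a hint, not proof, that alignment applied in one language did not carry over to another.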

Further Reading 📖

- How to break Alignment of LLMs: https://lnkd.in/eWS-VZCD

- Issues with LLM Benchmarks: https://lnkd.in/dmBeQZ_j

- LLMs as Stochastic Parrots: https://lnkd.in/eGt_UYci

- Ethical and Sustainability Issues of KG-based ML: https://lnkd.in/ejfb4qNC

We at Comma Soft AG develop Large Language Models and GenAI pipelines with a holistic perspective on model behavior and performance. Please share your favorite papers or simply your experiences with this topic! For more AI topics outside the daily random model updates, please get in contact with me or follow me here on LinkedIn. ❤️

#LostInGenai #artificialintelligence #selectllm
