These results give me hope for sustainable AI 🌱

I'm impressed by some of the recent advances in "small" open-weight large language models (LLMs).

TL;DR ⏱️

- Increased documentation supports reproducibility

- Data quality improves model performance

- Model distillation reduces hardware needs

More Documentation 📚

These models are accompanied by more thorough documentation, as seen with efforts from Apple and Meta. That supports reproducibility and reduces the effort and energy wasted on less promising pre-training and fine-tuning iterations.

Data Quality as an Opportunity 🔎

These models demonstrate that a focus on data quality can improve model performance, as evidenced by Phi-3. However, it's also clear that the total number of training tokens contributes to improvements, as seen with Llama 3 (and 3.1).

Model Distillation 👩🏽‍🔬

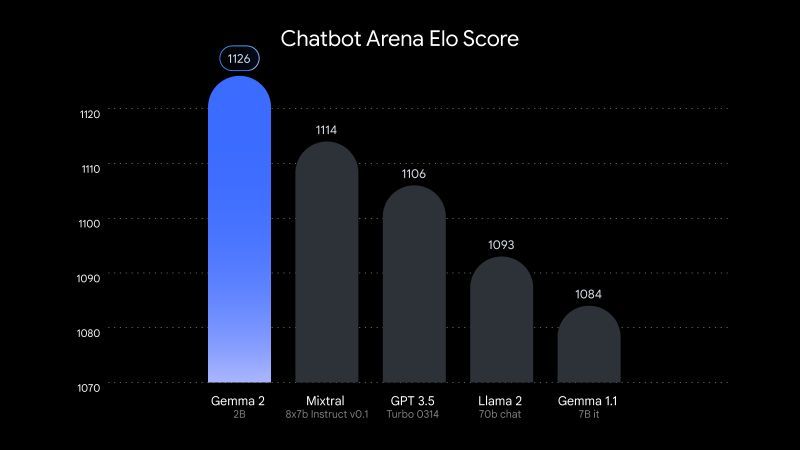

Model distillation has been effective in reducing total hardware requirements and inference time, which saves energy and resources. It will be interesting to see how quickly distilled models like Gemma 2 can outperform 6-month-old state-of-the-art models like Llama 2.

I appreciate the efforts to decrease hardware and energy demands while still providing helpful model responses.

#artificialintelligence #genai #sustainableai #llm