What happens in a learning (AI) brain? Problems of AI Model Knowledge Editing and LLM Cut Off dates lead to more errors in question answering and worse climate footprint

Discussing the challenges of AI Model Knowledge Editing and the impact on question answering systems and climate footprint.

TL;DR ⏱️

- Knowledge editing in AI models is complex and can lead to errors.

- Re-training models from scratch has a significant climate impact.

- Alternative solutions like RAG and Knowledge Graphs have their own challenges.

How do you learn? 🙇🏽♀️👩🏻🎓

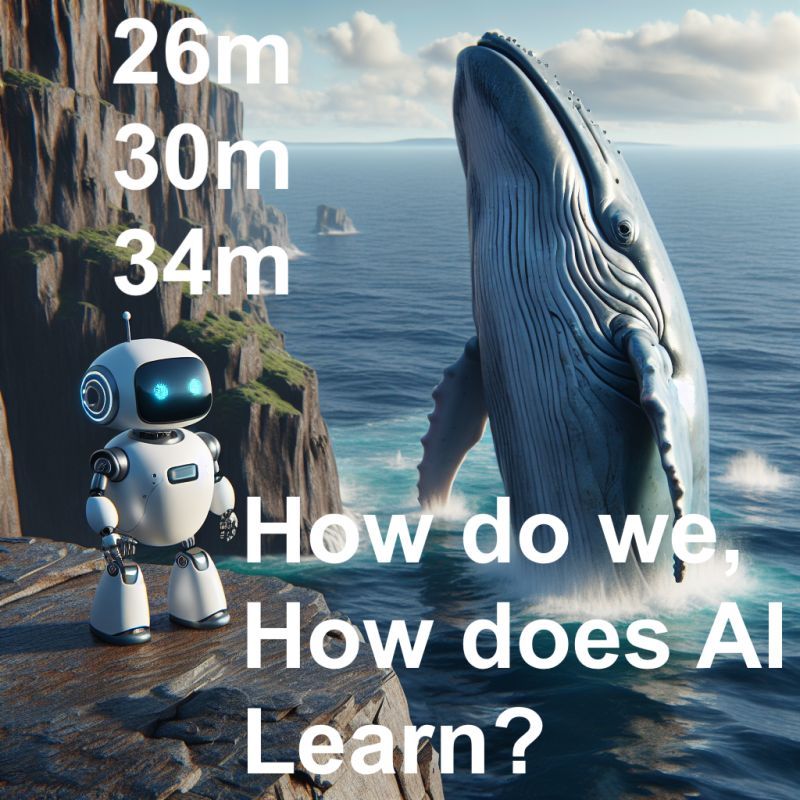

Imagine showing a friend a picture of a massive blue whale and telling them that it's the largest measured one, stretching nearly 34 meters long (German Wikipedia). They previously thought blue whales only grew up to (then well known) 30 meters (English Wikipedia). If you ask them now or in a year, they'll maybe recall the adjusted length, … or not. But were they capable to only update that fact in their brain or do they had to forget one unrelated fact e.g. one friend's name from kindergarten times. And how to find out what you lost in your mind for new knowledge?

Same issue in LLM based QA Systems: 🤖💭

This thought experiment illustrates the challenges we face when trying to update the knowledge of state-of-the-art (SOTA) language models (LLMs) with new information. These models gain their knowledge during corpus pretraining, a process that's largely opaque. But what if we want to add new capabilities to these models especially after they've already been fine-tuned and aligned?

Climate Issue without Knowledge Model Editing! 🏭🌱

It seems that currently we have to start from scratch to have stable new knowledge which requests huge amounts of NVIDIA GPU power. It also wastes spent effort for learning and training unchanged facts. I am still missing truly best practices if and how this is doable especially at scale beyond thousands of facts changing on daily bases.

RAG or Knowledge Graphs as a solution? 🛠️📚

Coming from a PhD in Knowledge Graph based ML @Smart Data Analytics which seems more appropriate for Questions answering on changing facts, I still think the Hype around LLMs need to reflect this issue. LLM-RAG is not always a solution due to Retrieval Recall issues, Hardware costs of flooded context length and many more.

Reach out! 💬❤️

We at Comma Soft AG develop novel approaches for Alan.de and our customers to tackle these issues always to get the best responses when you interact with GenAI based Question Answering Systems.

If you like to join our efforts, reach out to me for collaboration, or consider joining us #cometocomma for a team of lovely, highly productive and smart people 🥰

Share me your Thoughts!

I highly appreciate if leave me your thoughts about AI Knowledge Editing in the comments! Feel free to follow me for more details from our R&D 🙏🏽

#artificialintelligence #rag #llm #knowledgeediting #machinelearning