There is also a technical foundation for thinking capability in reasoning LLMs

Do LLMs Think – Or Is It Just Next Token Prediction? 🤔

TL;DR ⏱️

- Short explanation of reasoning models

- Technical description: where the potential for "thoughts" lies in transformers

- My hypothesis on LLMs and thinking capabilities

Background

Reasoning LLMs (like DeepSeek R1, Alibaba QwQ, or OpenAI oX) are currently at the center of the AI debate. They introduce a new dimension of scaling — inference-time compute — and rely on structured reasoning techniques such as Chain of Thought (CoT). This sparked my curiosity: can we go beyond "just next token prediction" and interpret their internal behavior as a form of technical thinking?

What I did:

I looked at reasoning models and how they operate:

- They generate additional intermediate tokens to "rethink" steps before finalizing an answer.

- Models are prompted to self-question (e.g., “wait, rethink…”).

- Some UIs hide these intermediate steps, showing only the final result.

- Inference costs are higher since more tokens are generated.
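To make the cost point concrete, here is a small back-of-the-envelope sketch. The token counts and per-1k-token prices are purely hypothetical placeholders, not figures from any real provider; the only point is that the hidden reasoning tokens are billed like any other output tokens.

```python
def generation_cost(prompt_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Cost of one request when input and output tokens are priced separately."""
    return (prompt_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k

# Hypothetical numbers, chosen only for illustration.
PRICE_IN, PRICE_OUT = 0.001, 0.002   # price per 1k tokens (assumed)
PROMPT, ANSWER, REASONING = 200, 150, 2000  # token counts (assumed)

direct_cost = generation_cost(PROMPT, ANSWER, PRICE_IN, PRICE_OUT)
reasoning_cost = generation_cost(PROMPT, ANSWER + REASONING, PRICE_IN, PRICE_OUT)

print(f"direct answer:  {direct_cost:.4f}")
print(f"with reasoning: {reasoning_cost:.4f}")
```

Even if the UI hides the intermediate steps, they still count toward the generated tokens in this simple model.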

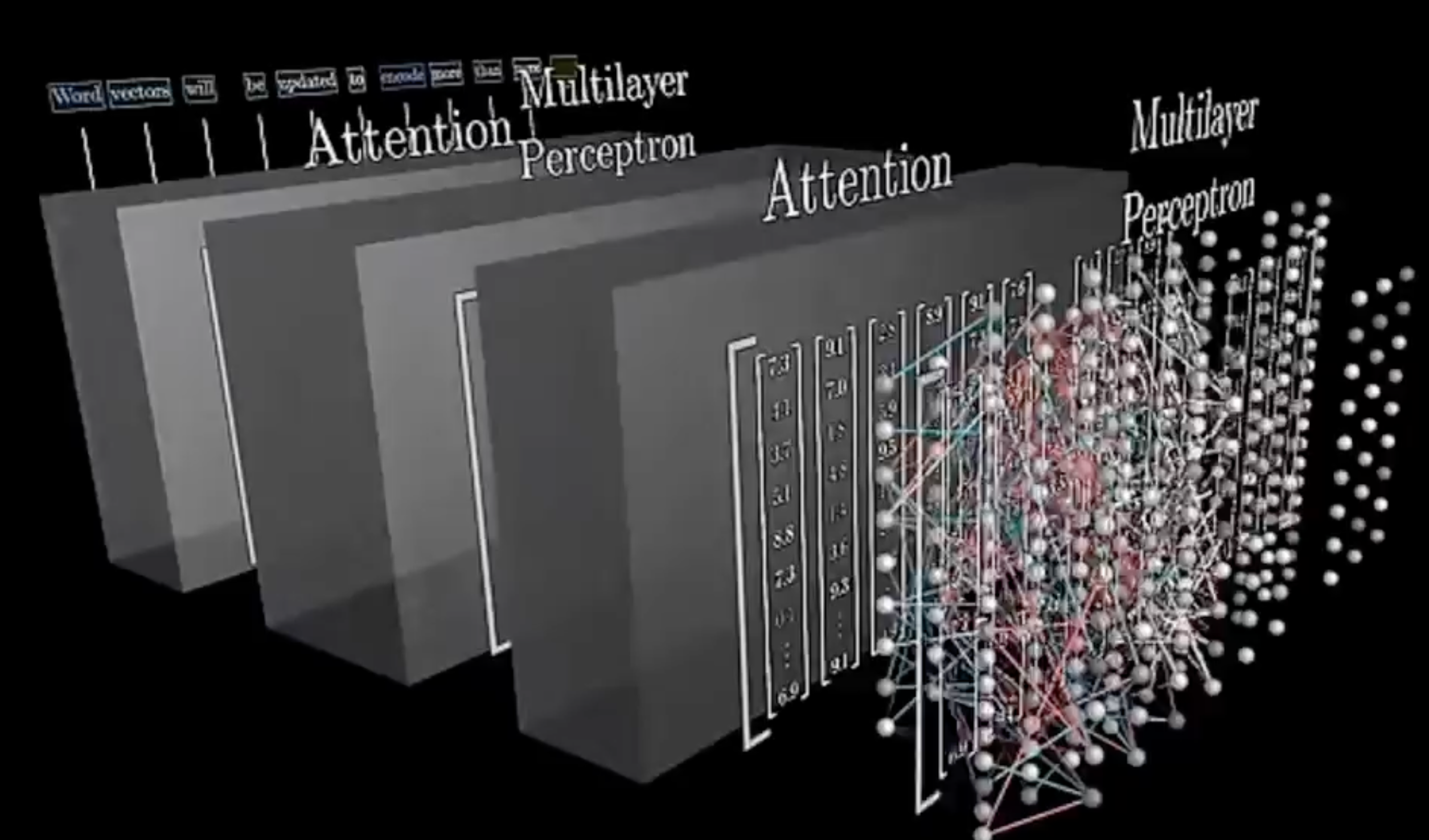

Then I explored how transformers might technically exhibit thinking-like behavior:

- Consider the set of all correct token sequences for a task.

- Gather hidden representations before the LM head.

- Treat these as high-dimensional pseudo-continuous sequences.

- Normalize their lengths so the sequences become comparable; together they define a solution subspace.

- Transformers are trained to sample in this subspace.

- Autoregressive generation may take a different path on each run, but it stays consistently within this subspace.

- With reasoning model training (RL + stable CoT), this target space becomes more robust.

- IMHO: This is akin to "thinking" — the architecture of a solution exists before token generation; autoregression merely unfolds it.
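The steps above can be sketched in a few lines of NumPy. This is only a toy illustration under strong assumptions: the "hidden states" are synthetic curves rather than activations from a real model, length normalization is done by linear interpolation, and the "solution subspace" is approximated by a low-rank linear subspace fitted with an SVD. The helper names (`resample_length`, `solution_subspace`, `distance_to_subspace`, `toy_trajectory`) are made up for this example.

```python
import numpy as np

def resample_length(traj, target_len):
    """Linearly interpolate a (T, d) trajectory to shape (target_len, d)."""
    src = np.linspace(0.0, 1.0, num=traj.shape[0])
    dst = np.linspace(0.0, 1.0, num=target_len)
    cols = [np.interp(dst, src, traj[:, j]) for j in range(traj.shape[1])]
    return np.stack(cols, axis=1)

def solution_subspace(trajectories, target_len=16, rank=2):
    """Fit a rank-`rank` linear subspace to the length-normalized trajectories."""
    flat = np.stack([resample_length(t, target_len).ravel() for t in trajectories])
    mean = flat.mean(axis=0)
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    return mean, vt[:rank]  # rows of the basis are orthonormal

def distance_to_subspace(traj, mean, basis, target_len=16):
    """Norm of the part of a trajectory that lies outside the fitted subspace."""
    x = resample_length(traj, target_len).ravel() - mean
    residual = x - basis.T @ (basis @ x)
    return float(np.linalg.norm(residual))

# Synthetic stand-in for "hidden states before the LM head" (assumption).
rng = np.random.default_rng(0)
d = 8
W = rng.normal(size=(2, d))  # shared structure of all "correct" sequences

def toy_trajectory(length, a, b, noise=0.01):
    t = np.linspace(0.0, 1.0, length)
    curve = a * np.outer(np.sin(np.pi * t), W[0]) + b * np.outer(np.cos(np.pi * t), W[1])
    return curve + noise * rng.normal(size=(length, d))

# "Correct" sequences of different lengths, all sharing the same structure.
train = [toy_trajectory(rng.integers(10, 31), rng.normal(), rng.normal()) for _ in range(20)]
mean, basis = solution_subspace(train)

on_dist = distance_to_subspace(toy_trajectory(23, 0.5, -1.0), mean, basis)
off_dist = distance_to_subspace(rng.normal(size=(23, d)), mean, basis)
print(f"held-out 'correct' sequence: {on_dist:.3f}")
print(f"random sequence:             {off_dist:.3f}")
```

In this toy setup, a held-out sequence with the shared structure lands much closer to the fitted subspace than a random one, even though its length differs from the training sequences. Whether real transformer hidden states behave this way is exactly the hypothesis, not something this sketch demonstrates.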

IMHO:

I believe reasoning models are not just predicting the next token. Instead, they explore and stabilize a solution subspace. This rough “solution architecture” resembles thinking, where the model already has a structural outline before writing down the answer.

Questions I leave open:

- Do LLMs already map themselves to "thoughts" in this sense?

- Could diffusion-like sampling make responses even more coherent?

- How much of the solution space is predetermined by the first token?

I’d love to hear your thoughts! We have controversial but highly productive debates in the ML team at Comma Soft AG and Alan.de ❤️ Big shoutout to 3Blue1Brown for the inspiring visual explanations!

❤️ Feel free to reach out, and leave a like if you want to see more content like this.

#artificialintelligence #reasoning #genai #machinelearning